Continuously enhance and assess Chatbot maturity via crowdsourced testing and training!

In this era of AI/ML, where artificial intelligence is rapidly replacing human intervention in many situations, chatbots are emerging as one of the most essential applications, providing the first line of customer experience to end users. Many of us already interact with such bots daily when dealing with our online banks, e-commerce suppliers, or food delivery portals.

For the uninitiated, a chatbot or virtual assistant is an AI-based software application designed to simulate text or speech conversation between machines and humans through natural language. A chatbot is therefore expected to respond like a human and answer a user’s queries intelligently, ideally without the user being able to tell whether the responses are manual or automated. Chatbots identify the user’s intent by analyzing the user’s request; the intent is the objective or purpose behind the user’s input. Once the intent is identified, the chatbot provides a relevant response based on its learning, or maturity. As the first response layer for end users, chatbots are critical in building or breaking the end user’s connection with the brand and in driving loyalty.
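To make the intent-then-response idea concrete, here is a minimal sketch in Python. It is not how any particular chatbot platform works: production bots typically use trained NLU models, whereas this stand-in scores word overlap (Jaccard similarity) between the user’s message and a few labeled training utterances. The intent names (`list_courses`, `course_fees`), the sample utterances, the canned responses, and the 0.3 threshold are all hypothetical.

```python
import re
from typing import Optional, Tuple

# Hypothetical intents, training utterances and responses, for illustration only.
TRAINING_SAMPLES = {
    "list_courses": [
        "I would like to know the list of available courses",
        "What courses do you offer",
    ],
    "course_fees": [
        "How much does a course cost",
        "What are the fees for the course",
    ],
}

RESPONSES = {
    "list_courses": "Here is the list of courses we currently offer.",
    "course_fees": "Course fees depend on the program you choose.",
}

FALLBACK = "Sorry, I didn't get that. Could you rephrase?"


def tokens(text: str) -> set:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def similarity(a: set, b: set) -> float:
    """Jaccard overlap of two token sets: 0.0 (nothing shared) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def identify_intent(utterance: str, threshold: float = 0.3) -> Tuple[Optional[str], float]:
    """Return (intent, score) of the most similar training sample, or (None, score) below threshold."""
    query = tokens(utterance)
    best_intent, best_score = None, 0.0
    for intent, samples in TRAINING_SAMPLES.items():
        for sample in samples:
            score = similarity(query, tokens(sample))
            if score > best_score:
                best_intent, best_score = intent, score
    if best_score < threshold:
        return None, best_score
    return best_intent, best_score


def respond(utterance: str) -> str:
    """Answer with the response mapped to the identified intent, or fall back."""
    intent, _ = identify_intent(utterance)
    return RESPONSES[intent] if intent else FALLBACK


if __name__ == "__main__":
    print(respond("May I have the list of courses"))
    print(respond("What's the weather today?"))
```

Here "May I have the list of courses" is routed to `list_courses`, while an out-of-scope question such as "What's the weather today?" falls below the threshold and gets the fallback reply.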

Understanding the Scope of Chatbot Testing – Maturity Assessment

Unlike typical software application testing, chatbot testing is not only about assessing the app’s functionality; it is mainly about assessing the chatbot’s maturity through its responses to various situations.

Any Chatbot testing plan needs to cover the following four categories of assessment:

- Functional accuracy: features, navigational flows, compatibility, performance accuracy

- Response to in-scope intents: its ability to provide relevant responses to various intents (in-scope activities), whether they are asked directly or indirectly

- Response to out-of-scope, unintended situations: how it handles lexical challenges and interruptions, and how smartly it responds to queries it was not trained on

- Continuous learning via data samples: the chatbot’s capability to gain maturity through continuous self-learning as more and more input data samples are fed to it

Further, two key aspects need to be considered when planning the testing of any chatbot app: first, the chatbot’s conversational capability, and second, the degree of intelligence an end user would expect from it.

In-depth chatbot testing across the four categories above is necessary to ensure an effective and efficient chatbot application. To offer an outstanding conversational experience, key characteristics such as functionality, interface, personality, and conversational intelligence need to be evaluated during testing. Moreover, a chatbot maturity model that allows continuous learning via data samples is key to delivering an advanced chatbot application. The only way to achieve this is through intensive testing and training that make chatbots highly effective and mature.

Key Challenges in Performing Chatbot Testing:

- Difficulty predicting diverse scenarios when responding to intents: Predicting the exact response a user expects for a specific intent or question is challenging, as it depends on how that user interacts. Understanding intent is important for creating an interactive chatbot experience, and chatbot training depends on how well the chatbot handles the conversation around each intent.

- Misunderstanding due to limited natural language understanding: Most chatbots cannot understand and adapt to the many ways humans phrase their questions, such as slang, misspellings, and sarcasm.

- Non-emotional responses: Chatbots usually do not respond to emotions. When users have an issue with a product, they often express emotion and frustration, and a flat response may fail to deliver the appropriate customer experience and turn the user off.

- UX and compatibility across platforms: Because of compatibility issues, the chatbot’s user experience may differ from platform to platform. A good user experience can be achieved to some extent, but ensuring compatibility across platforms remains a challenging task.

- Performance with an increased number of intents: Performance is affected when many users interact with the chatbot across a growing number of intents.
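One way to observe the performance challenge is to replay the same utterances with an increasing number of simulated concurrent users and watch how response times change. The sketch below assumes a hypothetical `fake_bot` function that only sleeps for 10 ms; in a real test it would be replaced by a call to the chatbot under test, and the utterances would span many intents.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def fake_bot(utterance: str) -> str:
    """Hypothetical stand-in for the chatbot under test; replace with a real API call."""
    time.sleep(0.01)  # simulated processing delay of 10 ms
    return "ok"


def timed_call(bot: Callable[[str], str], utterance: str) -> float:
    """Send one utterance to the bot and return its response time in seconds."""
    start = time.perf_counter()
    bot(utterance)
    return time.perf_counter() - start


def load_test(bot: Callable[[str], str], utterances: List[str], concurrent_users: int) -> Dict[str, float]:
    """Replay the utterances with `concurrent_users` parallel workers and summarize latency."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = list(pool.map(lambda u: timed_call(bot, u), utterances))
    return {
        "requests": len(latencies),
        "median_s": statistics.median(latencies),
        "p95_s": statistics.quantiles(latencies, n=20)[-1],  # 95th percentile cut point
        "max_s": max(latencies),
    }


if __name__ == "__main__":
    # Mix utterances across several (hypothetical) intents, as crowd testers would.
    utterances = ["Courses list please", "What are the fees?", "Talk to a human"] * 10
    for users in (1, 10, 30):
        print(users, load_test(fake_bot, utterances, users))
```

Because the stub’s delay is fixed, its latencies stay flat; against a real endpoint, a rising median or 95th-percentile time as `concurrent_users` grows is the signal to investigate.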

Oprimes Chatbot Testing Framework – Powered by Crowdsourcing

Assessing and enhancing chatbot maturity requires validating it from diverse perspectives and providing it with different types of learning samples. Such an exercise gives the best outcome when automated and crowdsourced techniques are combined. While automation can generate large volumes of learning sample data, assessing chatbot maturity purely through automation carries a big risk of automation bias, which affects the accuracy of the chatbot, because you are using one machine to assess the output of another. It might have worked if your users were bots :), but users are humans, full of emotion and unpredictability. Hence, your assessment method should replicate those conditions. Crowdsourcing is the most effective method to assess any chatbot’s maturity, and it also helps create unique learning samples that accelerate its maturity growth.

Let’s take a simple example of a chatbot for an ed-tech platform to understand the complexity of chatbot testing. This chatbot is intended to provide information about the educational programs and course curricula offered by the platform. A user who wants to ask the chatbot for the list of courses can phrase the request in many different ways:

“I would like to know the list of available courses”

“May I have a course list”

“Courses list please”

“What are the different courses running”

There are innumerable ways of phrasing a sentence for the same intent, with different sentence structures, syntax, grammatical errors, and so on. On top of that, there can be many different yet related intents for the same topic.

The more learning samples we provide to train the chatbot, the more mature it becomes: it can handle a wider range of queries for each intent, providing relevant responses and a better user experience.
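The effect of extra learning samples can be illustrated by treating the four phrasings above as a labeled test set and measuring how many of them a simple matcher routes to the right intent, before and after crowd-sourced samples are added. The Jaccard token-overlap matcher, the 0.3 threshold, the `list_courses` intent name, and the added samples are all assumptions for illustration, not a real chatbot’s training pipeline.

```python
import re
from typing import Dict, List, Optional, Tuple

THRESHOLD = 0.3  # hypothetical minimum token overlap for a match


def tokens(text: str) -> set:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def best_intent(utterance: str, samples: Dict[str, List[str]]) -> Optional[str]:
    """Nearest-sample matching by Jaccard token overlap; None when nothing clears THRESHOLD."""
    query = tokens(utterance)
    best, best_score = None, 0.0
    for intent, texts in samples.items():
        for text in texts:
            t = tokens(text)
            score = len(query & t) / len(query | t) if query | t else 0.0
            if score > best_score:
                best, best_score = intent, score
    return best if best_score >= THRESHOLD else None


def coverage(test_set: List[Tuple[str, str]], samples: Dict[str, List[str]]) -> float:
    """Fraction of labeled test utterances routed to their expected intent."""
    hits = sum(best_intent(u, samples) == expected for u, expected in test_set)
    return hits / len(test_set)


# The four phrasings from the article, used as a labeled test set.
TEST_SET = [
    ("I would like to know the list of available courses", "list_courses"),
    ("May I have a course list", "list_courses"),
    ("Courses list please", "list_courses"),
    ("What are the different courses running", "list_courses"),
]

# Hypothetical: one developer-written sample, then extra crowd-sourced samples.
BEFORE = {"list_courses": ["What courses do you offer"]}
AFTER = {"list_courses": BEFORE["list_courses"] + [
    "Show me the list of courses",
    "Course list",
    "Which courses are available",
    "List all courses",
]}

print(coverage(TEST_SET, BEFORE))  # 0.0
print(coverage(TEST_SET, AFTER))   # 0.75
```

With only the developer-written sample, none of the four phrasings match; after four crowd samples are added, three do. The remaining miss, "What are the different courses running", is exactly the kind of phrasing crowd testers surface, and it becomes the next learning sample.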

The crowdsourcing model gives us access to large numbers of test users operating under diverse, real-world conditions. They interact with the chatbot in both structured and exploratory ways, creating diverse permutations of one or more intents. This not only assesses the chatbot more holistically but also provides varied learning samples that enhance its maturity much faster.

With the Oprimes crowdsourced chatbot testing solution, we can perform conversational testing, exploratory testing, and usability testing and studies to assess the readiness and maturity of the chatbot.

Oprimes Chatbot testing framework

The key objective is to assess the maturity of the AI-powered chatbot by the relevance of its responses, how it handles interruptions, and its intelligence, answering, understanding, response time, lexical handling, and so on. A further objective is to improve the chatbot’s accuracy by creating more and more learning samples for its training.

We at Oprimes have defined a ready-to-use framework that provides 360-degree coverage of a chatbot’s functional, conversational, usability, and maturity aspects.

Multi-Dimensional Chatbot Testing Framework:

1. Structured/conversational testing – Testers assess the happy flow for each defined intent of the chatbot.

2. Exploratory testing – Testers pose multiple questions to the chatbot for each intent to assess its behavior, relevance, and maturity.

3. Multi-device testing – Functional compatibility testing across unique device configurations and user demographics.

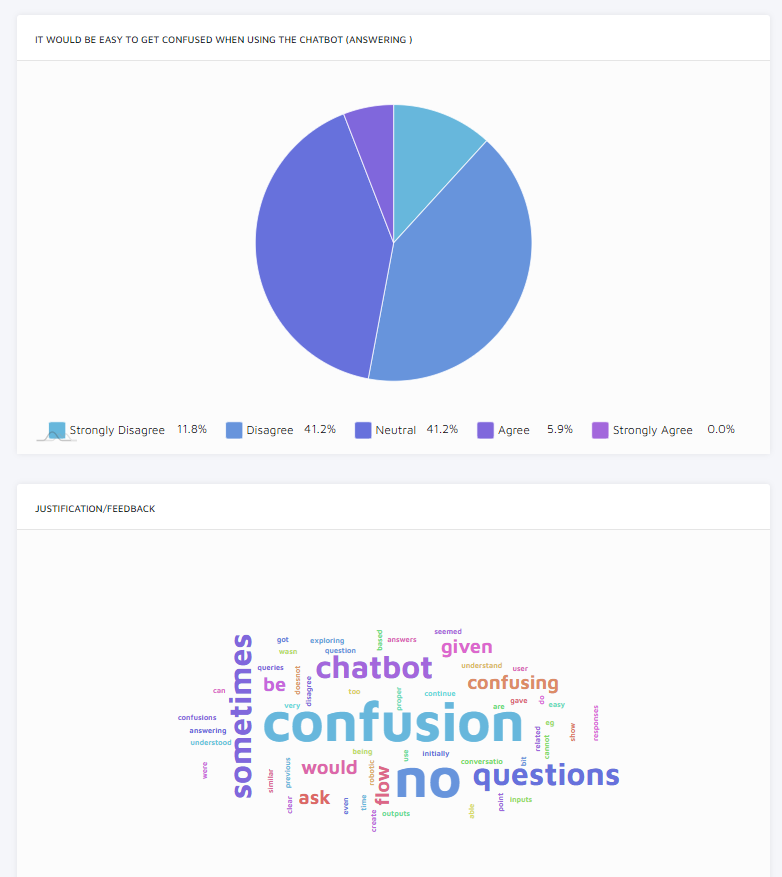

4. Usability survey and assessment – A defined target pool of users assesses the UX. The usability study and its questions are defined based on the key parameters below.

| Key Parameter | Description |
|---|---|
| Intelligence | Is the chatbot intelligent enough to understand context? Can it remember things, and does it use and manage context the way a person would for an intent? |
| Error Management | Is the chatbot good at troubleshooting and handling the errors that will inevitably occur? |
| Navigation and Interface | Is the conversation flow well structured, and is it easy for the user to find the necessary information in the discussion? |
| Answering | Are the chatbot’s responses relevant to the user’s questions, and are its answers contextually relevant and accurate enough? |
| Understanding | What kinds of language items (humour, idioms, slang, requests, small talk, emojis) does the chatbot understand? |
| Onboarding | Is it easy to understand how to use the chatbot from the very start of the conversation? |
| Personality | Does the chatbot have a name, voice, and tone? Does its tone suit your audience and the nature of the ongoing conversation? |
| Response time | Does the chatbot respond quickly? Customers want fast responses, and faster responses keep them more engaged with the bot. |

Testing Approach and Outcome

O-primes is India’s largest crowdtesting platform, with a community of thousands of testers and test users spread across 120 countries. It also provides intelligent workflows for tester selection, test case management, surveys, automated analytics and reporting, and much more.

A team of test users and selected domain experts is handpicked from the O-primes community and configured on the platform. A project manager works with the chatbot business and development teams to design the testing plan, including the key parameters, intents, evaluation tree, and so on.

Test users are divided into focus groups. Some groups are given guidelines and a tree structure for exercising specific intents in different ways to try to break the chatbot flow. Another focus group is asked to perform an exploratory assessment of the chatbot on topics both in scope and out of scope. Professional testers and domain experts are added on top to check functional and content accuracy as well.

The test users provide conversational and usability feedback, raise issues, and generate different combinations of learning samples that are added to the chatbot’s database. Our experts summarize all these deliverables and convert them into chatbot maturity ratings based on our evaluation model. Subjective feedback is also converted into actionable recommendations for the business and development teams to work on.
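The O-primes evaluation model itself is not described here, so the following is only a hypothetical illustration of turning tester ratings into a maturity summary: each tester rates the eight parameters from the usability table on an assumed 1 to 5 scale, the ratings are averaged per parameter and then overall, and parameters scoring below an assumed cut-off of 3.0 are listed as candidates for recommendations.

```python
from statistics import mean
from typing import Dict, List

# The eight usability parameters from the table above.
PARAMETERS = [
    "Intelligence", "Error Management", "Navigation and Interface", "Answering",
    "Understanding", "Onboarding", "Personality", "Response time",
]


def maturity_summary(ratings: List[Dict[str, int]], weak_below: float = 3.0) -> dict:
    """Summarize tester ratings (hypothetical 1-5 scale) per parameter and overall.

    An unweighted average for illustration only, not the O-primes evaluation model.
    """
    per_parameter = {p: mean(r[p] for r in ratings) for p in PARAMETERS}
    overall = mean(list(per_parameter.values()))
    # Weakest parameters first, as candidates for actionable recommendations.
    weak = sorted((p for p, s in per_parameter.items() if s < weak_below), key=per_parameter.get)
    return {"per_parameter": per_parameter, "overall": overall, "weak": weak}


if __name__ == "__main__":
    base = {p: 4 for p in PARAMETERS}
    testers = [
        {**base, "Personality": 2, "Response time": 5},  # hypothetical tester A
        {**base, "Personality": 3, "Response time": 3},  # hypothetical tester B
    ]
    summary = maturity_summary(testers)
    print(summary["overall"], summary["weak"])  # 3.8125 ['Personality']
```

An unweighted mean keeps the example simple; a real model might weight parameters differently or combine ratings with issue counts and learning-sample coverage.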

Let’s take an example: for one intent, 50 test users each pose 10 different questions and assess the chatbot. This yields 500 learning samples (50 × 10) for training the chatbot on that specific intent, as well as for domain validation. The same activity is conducted for every intent the chatbot is designed to handle.
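The arithmetic is simply testers × questions per tester for each intent: 50 × 10 = 500 raw samples for one intent, repeated for every intent. Since different testers may phrase a question identically, it can be worth counting unique samples as well. The sketch below assumes a simple normalization (lowercasing and stripping punctuation) to detect duplicates; the function names are hypothetical.

```python
import re
from typing import List, Tuple


def normalize(utterance: str) -> str:
    """Lowercase, drop punctuation and collapse whitespace so trivial variants count once."""
    return " ".join(re.findall(r"[a-z0-9']+", utterance.lower()))


def sample_counts(per_tester: List[List[str]]) -> Tuple[int, int]:
    """Return (raw, unique) learning-sample counts for one intent."""
    raw = [q for questions in per_tester for q in questions]
    return len(raw), len({normalize(q) for q in raw})


if __name__ == "__main__":
    # 50 hypothetical testers x 10 distinct questions each for one intent.
    per_tester = [[f"tester {t} question {i}" for i in range(10)] for t in range(50)]
    print(sample_counts(per_tester))  # (500, 500)
    # Near-duplicates collapse to one unique sample after normalization.
    print(sample_counts([["Courses list please", "courses list, please!"], ["COURSES LIST PLEASE"]]))  # (3, 1)
```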

Learning samples enhance chatbot training, improving relevant responses, error handling, lexical handling, and conversational intelligence for a better conversational experience, whereas multi-device, exploratory, and usability testing help improve the chatbot’s compatibility, stability, and user experience.

O-primes is one of the largest crowdtesting platforms in the APAC region. We are trusted by leading enterprises and growth-stage startups to achieve seamless quality and UX assurance for their applications across chatbot, OTT, cloud, media, hi-tech, internet, m-health, and many other domains. O-primes provides a mature chatbot testing solution to more than 20 firms and also partners with many chatbot development agencies to build intelligent solutions for their customers.

Written by Shalini Raghunath